Hello, World!

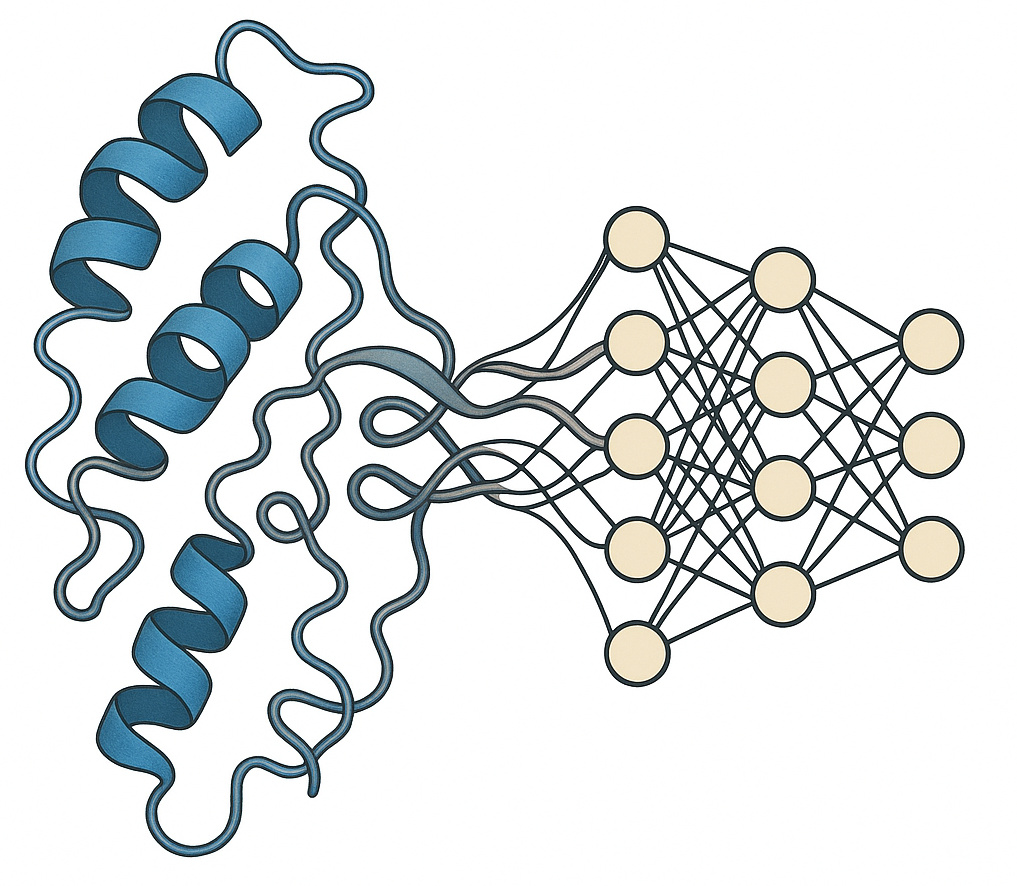

This is the personal webpage of Tuan ([tʰwɑ̃n], full name: Tuan Q. Dinh). I am currently a postdoctoral fellow working on foundation models and their development for scientific discovery, especially for proteins and human genetics. I am jointly supervised by Prof. Vasilis Ntranos at UCSF and the Data Sciences group at Maze Therapeutics.

Research interests: AI/ML and AI4Science, with a current focus on modular deep learning for computational biology.

When time rushes by, I read/translate poems, seeking the ones that speak to me, e.g., I chase the mist where whispers lie, The wise take wing beneath the sky.

Education: Tuan obtained a Ph.D. in Computer Sciences (minor in Statistics) with Prof. Kangwook Lee, studying modular neural networks built on pre-trained models. Previously, Tuan completed an M.S. with Prof. Vikas Singh and a B.E. with Prof. Tru Cao, working on AI systems for disease forecasting and healthcare.

News

05.25: Submitted our work on ESM for variant effect prediction

05.25: TabFlex is accepted to ICML 2025 (Spotlight). Thanks to Yuchen and collaborators at Microsoft.

05.25: Two collaborative works on protein language models are under review
